Website performance with ProcessWire, nginx and fastcgi_cache

I love ProcessWire. It's straightforward, it's easy to understand, and it's fast. And it plays well with nginx, my favourite alternative to the Apache web server. Sometimes though, this is still not fast enough.

nginx and fastcgi

Much has been said about nginx ("Engine X"), and I won't repeat all that here. nginx is a web server with a small memory footprint that is very fast when it comes to delivering static assets. There is a sample config to make ProcessWire work with nginx here.

The more interesting thing is that there is no mod_php as you're used to from Apache. Instead, nginx works with the FastCGI interface.

Basically, your interpreter communicates with the web server through either Unix sockets or TCP connections. This lets your application run separately from your web server. It can even run on a different machine, or you could use multiple FastCGI backends (which has various advantages but comes with its own problems; more on this when the time has come). Your web server works as a kind of proxy to your application.

That is basically what this code block in the nginx config is about:

location ~ \.php$ {

# Check if the requested PHP file actually exists for security

try_files $uri =404;

# Fix for server variables that behave differently under nginx/php-fpm than typically expected

fastcgi_split_path_info ^(.+\.php)(/.+)$;

# Set environment variables

include fastcgi_params;

fastcgi_param PATH_INFO $fastcgi_path_info;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

# Pass request to php-fpm fastcgi socket

fastcgi_pass unix:/var/run/example.com_fpm.sock;

}

In /etc/php5/fpm/pool.d/www.conf you set whether PHP should listen on a Unix socket or on TCP with this directive:

listen = /var/run/example.com_fpm.sock or listen = 127.0.0.1:9000

Change your fastcgi_pass directive accordingly.
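As a rough sketch of what "accordingly" could look like: the first part assumes php-fpm listens on 127.0.0.1:9000, the second shows one way to spread requests over several FastCGI backends. The upstream name php_backend and the second address 10.0.0.2 are made up for illustration and are not part of the original setup.

```nginx
# Variant A: php-fpm listens on TCP (listen = 127.0.0.1:9000)
location ~ \.php$ {
    try_files $uri =404;
    include fastcgi_params;
    fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    # TCP address instead of unix:/var/run/example.com_fpm.sock
    fastcgi_pass 127.0.0.1:9000;
}

# Variant B: several backends. The upstream block belongs in the http
# context; "php_backend" and 10.0.0.2 are hypothetical.
upstream php_backend {
    server 127.0.0.1:9000;
    server 10.0.0.2:9000;
}
# ...and inside the location block you would then use:
# fastcgi_pass php_backend;
```

Use only one of the two variants for a given location block.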

Here is a brief overview of which one to choose. tl;dr: usually it won't matter.

There are also a lot of other options described in the manual.

Caching

In ProcessWire, you have two caching options, TemplateCache and MarkupCache. These are well documented and come with their own advantages and disadvantages. This blog post gives a good summary.

However, when you need even more performance, there is the ProCache module. It creates static pages from your site and serves these instead, thus reducing the server load by orders of magnitude.

There are still two drawbacks to it.

- It costs money.

- It requires .htaccess

1. It costs money

Come on! It's only $39! Ryan not only invented ProcessWire, he thought of this caching solution which – from all I heard – works magnificently. If you run ProcessWire on Apache, read no further: I encourage you to buy ProCache instead. If your site is important enough to need ProCache, there should be a way to afford it. Seriously.

2. It requires .htaccess

This is an issue when you work with nginx (as we want to do now). nginx does not parse or respect any .htaccess (read here why it does not and why it is good that it does not). You could add all these directives manually to your nginx config, but … you don't want that. Instead, we have something just as powerful:

fastcgi_cache

Enter fastcgi_cache. You should have it installed, as it comes with current versions of nginx. fastcgi_cache takes the response of your application backend and, according to a specified set of rules, writes this response to a file which will be delivered statically as long as it is valid, without ever touching PHP/MySQL. Remember that nginx is great at serving static assets? There you go…

How can I set it up?

Outside the server{ } block, you define the cache.

fastcgi_cache_path /tmp/nginx/cache levels=1:2 keys_zone=MYSITE:10m inactive=60m;

fastcgi_cache_key "$scheme$request_method$host$request_uri";

add_header X-Fastcgi-Cache $upstream_cache_status;

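You set the path (/tmp/nginx/cache or wherever you want it to be), the zone (MYSITE), the size of the zone's shared memory for keys (10 megabytes), and with inactive the time after which entries that have not been requested are removed from the cache (60 minutes). The second directive defines the key under which each response is stored. The third adds a header which will show us if the cache is working or not.

Now what about levels? This defines the cache hierarchy. A string is generated from the cache key you provide (more on that in a minute) and hashed with MD5. The cache directories are then built from the end of the resulting hash: with levels=1:2, the last character names the first-level directory and the two characters before it name the second level. From the manual: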

For example, in the following configuration

fastcgi_cache_path /data/nginx/cache levels=1:2 keys_zone=one:10m;

file names in a cache will look like this:

/data/nginx/cache/c/29/b7f54b2df7773722d382f4809d65029c

Now we need to enable the cache. In the location ~ \.php$ { } block, put

fastcgi_cache MYSITE;

fastcgi_cache_valid 200 1m;

This enables the cache and stores it in the zone MYSITE we defined earlier. Every successful response (HTTP 200) is valid for 1 minute.

You need to reload your nginx config now (nginx -t, then service nginx reload). When you load your site twice, you should see the X-Fastcgi-Cache header with a value of HIT. This means your cache is working.
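Put together, the pieces so far could be arranged roughly like this. It is only a sketch of where each directive goes: server_name and root are placeholders I made up, and everything else is taken from the snippets above.

```nginx
# http context, outside server { }
fastcgi_cache_path /tmp/nginx/cache levels=1:2 keys_zone=MYSITE:10m inactive=60m;
fastcgi_cache_key "$scheme$request_method$host$request_uri";
# Note: add_header is only inherited by blocks that define no add_header
# of their own.
add_header X-Fastcgi-Cache $upstream_cache_status;

server {
    # Placeholders, adjust to your site
    server_name example.com;
    root /var/www/example.com;

    location ~ \.php$ {
        try_files $uri =404;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        include fastcgi_params;
        fastcgi_param PATH_INFO $fastcgi_path_info;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_pass unix:/var/run/example.com_fpm.sock;

        # Enable the cache zone defined above
        fastcgi_cache MYSITE;
        fastcgi_cache_valid 200 1m;
    }
}
```

On the first request the X-Fastcgi-Cache header should read MISS, and on the second HIT.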

Now what does it mean for the site?

STATS

I load tested a small site with the HTTP server benchmarking tool ab.

A command like this ab -c10 -n500 would produce the following results:

cache disabled

Concurrency Level: 10

Time taken for tests: 23.963 seconds

Complete requests: 500

Failed requests: 0

Write errors: 0

Total transferred: 1808500 bytes

HTML transferred: 1593500 bytes

Requests per second: 20.87 [#/sec] (mean)

Time per request: 479.253 [ms] (mean)

Time per request: 47.925 [ms] (mean, across all concurrent requests)

Transfer rate: 73.70 [Kbytes/sec] received

cache enabled

Concurrency Level: 10

Time taken for tests: 2.375 seconds

Complete requests: 500

Failed requests: 0

Write errors: 0

Total transferred: 1808500 bytes

HTML transferred: 1593500 bytes

Requests per second: 210.54 [#/sec] (mean)

Time per request: 47.497 [ms] (mean)

Time per request: 4.750 [ms] (mean, across all concurrent requests)

Transfer rate: 743.67 [Kbytes/sec] received

Note the 10x increase. This is ok, but is it impressive?

No, but yes. 10x is something, but honestly: we all expected a bit more, right? The improvement is even more visible when you raise the concurrency. Try it yourself. The most I got out of this was a 100x improvement.

Yet, it's just a short spike, which the server can handle pretty well. But what happens if many people come to your site over a longer period, maybe because your article on caching has made it to the top of a really heavily visited tech magazine?

There is a nice tool to simulate this: siege. You can install it on Ubuntu with apt-get install siege.

It [Siege] lets its user hit a web server with a configurable number of simulated web browsers. Those browsers place the server “under siege.”

I let siege run for 5 minutes with a concurrency of 50. This is some moderate traffic already. Note that with the above config, a cached page expires after one minute, so roughly once a minute a request goes through to ProcessWire to generate a fresh copy.

Before

Transactions: 5695 hits

Availability: 100.00 %

Elapsed time: 299.93 secs

Data transferred: 11.18 MB

Response time: 2.12 secs

Transaction rate: 18.99 trans/sec

Throughput: 0.04 MB/sec

Concurrency: 40.18

Successful transactions: 5695

Failed transactions: 0

Longest transaction: 7.11

Shortest transaction: 0.22

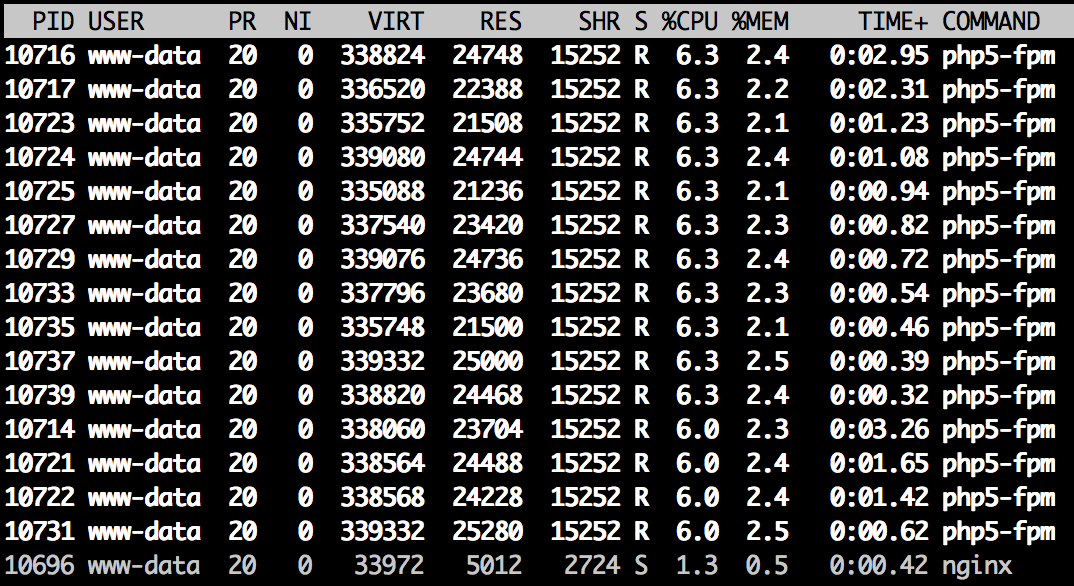

Letting siege run for 5 minutes gives you a nice impression of how it actually affects the server. Server load increases to an impressive 15, and almost all your memory gets eaten up by PHP (this depends on your config, which is pretty much standard on this machine).

Here is the top output:

After

Transactions: 28730 hits

Availability: 100.00 %

Elapsed time: 299.60 secs

Data transferred: 56.11 MB

Response time: 0.02 secs

Transaction rate: 95.89 trans/sec

Throughput: 0.19 MB/sec

Concurrency: 2.26

Successful transactions: 28730

Failed transactions: 0

Longest transaction: 0.24

Shortest transaction: 0.01

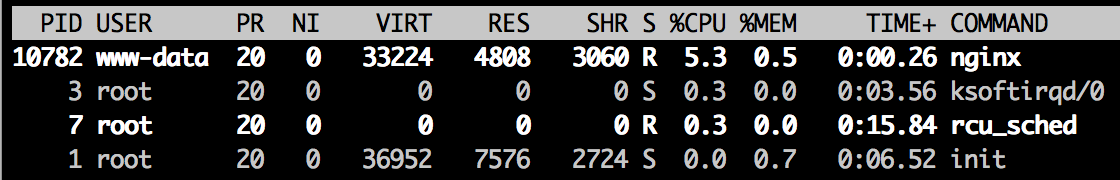

Oh, and here is the top output again:

We have a constant load of 0.07

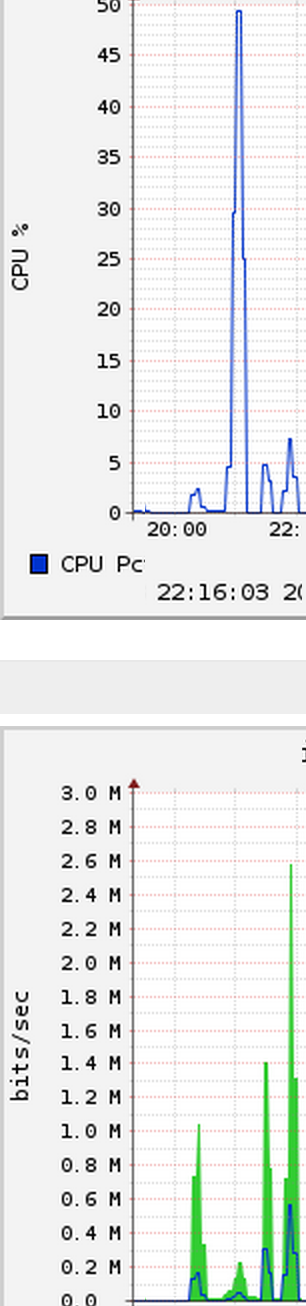

Please also compare these graphs, the upper is CPU usage, the lower network throughput:

We have more network throughput with significantly less CPU usage. Isn't that something?

Let's see what happens if we really have traffic. This time siege runs for five minutes with -c500, which is 10x as much as before. (This is the third spike in the diagrams above.)

Transactions: 60640 hits

Availability: 100.00 %

Elapsed time: 299.19 secs

Data transferred: 118.41 MB

Response time: 1.93 secs

Transaction rate: 202.68 trans/sec

Throughput: 0.40 MB/sec

Concurrency: 391.91

Successful transactions: 60640

Failed transactions: 3

Longest transaction: 30.24

Shortest transaction: 0.01

What you see here is that there are failed transactions already. This can easily be compensated for by a longer cache lifetime. With this load, going back to the backend every minute might not be such a good idea, depending on the performance of your server. In this case, it's the second weakest machine available on Linode.

What I didn't measure is the memory consumption. Huge load on your backend usually comes with a lot of memory consumption. In serving static assets, nginx has a very low memory footprint compared to Apache. See these stats for example.

Not caching things - selectively

So well, your website is cached now. You might find yourself asking two things:

- How can I clear the cache?

- How the heck can I get into my backend?

For cleaning the cache, as of now just rm -rf all the things. In the above config, it would be rm -rf /tmp/nginx/cache/*. We will discuss a more sophisticated solution later.

For getting into your backend, it is necessary to do some work. We need to define exemptions. So what is it we wouldn't want to cache?

- Everything POST

- Everything behind /processwire/

In our config, we first look up the server{ } block, and place this inside:

#Cache everything by default

set $no_cache 0;

#Don't cache POST requests

if ($request_method = POST)

{

set $no_cache 1;

}

#Don't cache if the URL contains a query string

if ($query_string != "")

{

set $no_cache 1;

}

#Don't cache the following URLs

if ($request_uri ~* "/(processwire/)")

{

set $no_cache 1;

}

#Don't cache if there is a cookie called wire_challenge (which means you're logged in)

#$http_cookie holds the whole Cookie header, so match with a regex instead of "="

if ($http_cookie ~* "wire_challenge")

{

set $no_cache 1;

}

To the location ~ \.php$ { } block, we add the following:

fastcgi_cache_bypass $no_cache;

fastcgi_no_cache $no_cache;

Again, test and reload your nginx config.
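If you prefer to avoid a chain of if blocks, the same exemptions can probably be expressed with map. This is an alternative sketch, not the config used for the measurements above; the variable names ($skip_method, $skip_query, $skip_uri, $skip_cookie) are made up for illustration.

```nginx
# http context, outside server { }

# 1 for POST requests, 0 otherwise
map $request_method $skip_method {
    default 0;
    POST    1;
}

# 0 for an empty query string, 1 otherwise
map $query_string $skip_query {
    default 1;
    ""      0;
}

# 1 for everything behind /processwire/
map $request_uri $skip_uri {
    default          0;
    ~*/processwire/  1;
}

# 1 if the Cookie header mentions wire_challenge
map $http_cookie $skip_cookie {
    default          0;
    ~*wire_challenge 1;
}

# Inside the location ~ \.php$ { } block:
# the cache is skipped if at least one value is non-empty and not "0"
fastcgi_cache_bypass $skip_method $skip_query $skip_uri $skip_cookie;
fastcgi_no_cache     $skip_method $skip_query $skip_uri $skip_cookie;
```

Both directives accept several variables, so no combined $no_cache variable is needed in this variant.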

Mobile aware caching

We are doing responsive web design with server-side components. This means, for example, serving a reduced CSS to all mobile users. Maybe a distinct (and smaller) JavaScript file. Even less markup or fewer images. Anything that caters to the reduced bandwidth you have to assume with mobile visitors.

In caching however, this creates a big challenge. The sites are generated, stored and then delivered. How can you distinguish between mobile and not mobile use? The page is already generated, so using cookies is not really an option. You might think about creating a "pre-site" that drops a cookie, but … let's be honest: caching is always added in a very late stage of the project, so this is also not an option.

So what can we do? If we look again through our config file, we notice this:

fastcgi_cache_key "$scheme$request_method$host$request_uri";

This means, that every key looks something like this:

httpsGETmydomain.com/sites/awesome/detail/

This is what the above-mentioned MD5 string is created from. So here, we could add something.

Putting the server in server side

Web servers always know about the user agent. If we can detect the user agent, we can make an educated guess whether visitors are mobile or non-mobile users. Thankfully, nginx exposes the user agent as a variable, and its configuration language lets us do the following:

if ($http_user_agent ~* "(android|bb\d+|meego).+mobile|avantgo|bada\/|blackberry|blazer|compal|elaine|fennec|hiptop|iemobile|ip(hone|od)|iris|kindle|lge |maemo|midp|mmp|netfront|opera m(ob|in)i|palm( os)?|phone|p(ixi|re)\/|plucker|pocket|psp|series(4|6)0|symbian|treo|up\.(browser|link)|vodafone|wap|windows (ce|phone)|xda|xiino") {

set $mobile_cache_cookie "mobile=true";

}

if ($http_user_agent ~* "^(1207|6310|6590|3gso|4thp|50[1-6]i|770s|802s|a wa|abac|ac(er|oo|s\-)|ai(ko|rn)|al(av|ca|co)|amoi|an(ex|ny|yw)|aptu|ar(ch|go)|as(te|us)|attw|au(di|\-m|r |s )|avan|be(ck|ll|nq)|bi(lb|rd)|bl(ac|az)|br(e|v)w|bumb|bw\-(n|u)|c55\/|capi|ccwa|cdm\-|cell|chtm|cldc|cmd\-|co(mp|nd)|craw|da(it|ll|ng)|dbte|dc\-s|devi|dica|dmob|do(c|p)o|ds(12|\-d)|el(49|ai)|em(l2|ul)|er(ic|k0)|esl8|ez([4-7]0|os|wa|ze)|fetc|fly(\-|_)|g1 u|g560|gene|gf\-5|g\-mo|go(\.w|od)|gr(ad|un)|haie|hcit|hd\-(m|p|t)|hei\-|hi(pt|ta)|hp( i|ip)|hs\-c|ht(c(\-| |_|a|g|p|s|t)|tp)|hu(aw|tc)|i\-(20|go|ma)|i230|iac( |\-|\/)|ibro|idea|ig01|ikom|im1k|inno|ipaq|iris|ja(t|v)a|jbro|jemu|jigs|kddi|keji|kgt( |\/)|klon|kpt |kwc\-|kyo(c|k)|le(no|xi)|lg( g|\/(k|l|u)|50|54|\-[a-w])|libw|lynx|m1\-w|m3ga|m50\/|ma(te|ui|xo)|mc(01|21|ca)|m\-cr|me(rc|ri)|mi(o8|oa|ts)|mmef|mo(01|02|bi|de|do|t(\-| |o|v)|zz)|mt(50|p1|v )|mwbp|mywa|n10[0-2]|n20[2-3]|n30(0|2)|n50(0|2|5)|n7(0(0|1)|10)|ne((c|m)\-|on|tf|wf|wg|wt)|nok(6|i)|nzph|o2im|op(ti|wv)|oran|owg1|p800|pan(a|d|t)|pdxg|pg(13|\-([1-8]|c))|phil|pire|pl(ay|uc)|pn\-2|po(ck|rt|se)|prox|psio|pt\-g|qa\-a|qc(07|12|21|32|60|\-[2-7]|i\-)|qtek|r380|r600|raks|rim9|ro(ve|zo)|s55\/|sa(ge|ma|mm|ms|ny|va)|sc(01|h\-|oo|p\-)|sdk\/|se(c(\-|0|1)|47|mc|nd|ri)|sgh\-|shar|sie(\-|m)|sk\-0|sl(45|id)|sm(al|ar|b3|it|t5)|so(ft|ny)|sp(01|h\-|v\-|v )|sy(01|mb)|t2(18|50)|t6(00|10|18)|ta(gt|lk)|tcl\-|tdg\-|tel(i|m)|tim\-|t\-mo|to(pl|sh)|ts(70|m\-|m3|m5)|tx\-9|up(\.b|g1|si)|utst|v400|v750|veri|vi(rg|te)|vk(40|5[0-3]|\-v)|vm40|voda|vulc|vx(52|53|60|61|70|80|81|83|85|98)|w3c(\-| )|webc|whit|wi(g |nc|nw)|wmlb|wonu|x700|yas\-|your|zeto|zte\-)") {

set $mobile_cache_cookie "mobile=true";

}

and change the cache key to

fastcgi_cache_key "$scheme$request_method$mobile_cache_cookie$host$request_uri";

Test and reload your configuration and you will see: it works.
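The same idea can also be sketched with a map, which gives $mobile_cache_cookie an explicit empty default for non-mobile visitors (with the if version the variable is simply never set for them, and nginx may log a warning about an uninitialized variable). The shortened pattern below is only a subset of the expressions above, picked for readability; it is an assumption on my part, not a replacement for the full list.

```nginx
# http context, outside server { }
map $http_user_agent $mobile_cache_cookie {
    # Non-mobile visitors: nothing is added to the cache key
    default "";

    # Shortened, illustrative subset of the mobile patterns
    "~*android.+mobile|ip(hone|od)|iemobile|blackberry|opera m(ob|in)i|windows (ce|phone)" "mobile=true";
}

# The cache key stays the same as in the text
fastcgi_cache_key "$scheme$request_method$mobile_cache_cookie$host$request_uri";
```

If you use this variant, drop the two if blocks, so the variable is defined in only one place.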

Further advantages

fastcgi_cache_use_stale

The directive fastcgi_cache_use_stale error timeout updating invalid_header http_500; (above your server { } block) tells nginx to serve the last valid version of your site in case your backend (ProcessWire) or the php-fpm process crashes. This is especially great if you update your CMS in the background.

fastcgi_cache_valid

You can use different cache lifetimes for different HTTP response codes. For example: HTTP 302 has a cache lifetime of 2 minutes, HTTP 200 of 60 minutes. For further explanation I suggest you read the manual.

Please be aware that additional no-cache headers or cookies sent by your backend can change the way your pages are being cached. Always check the headers ;)
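As a small sketch, the two directives from this section could sit in the config like this. The lifetimes are the example values mentioned above; adjust them to your site.

```nginx
# http context, above server { }:
# serve the last valid copy if the backend errors out, times out,
# is currently being refreshed, or sends an invalid header or a 500
fastcgi_cache_use_stale error timeout updating invalid_header http_500;

# Inside the location ~ \.php$ { } block:
fastcgi_cache MYSITE;
fastcgi_cache_valid 200 60m;  # successful responses: 60 minutes
fastcgi_cache_valid 302 2m;   # temporary redirects: 2 minutes
```

Response codes without a matching fastcgi_cache_valid line are not covered by these lifetimes.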

So…

I suggest you try nginx if you haven't already, together with ProcessWire. The next issue will address a more comfortable way of purging the cache.

I am @gurkendoktor on Twitter, feel free to ask or add something. You could also join the conversation in the ProcessWire forum: https://processwire.com/talk/topic/8161-website-performance-with-processwire-nginx-and-fastcgi-cache/